Local Docs, Local AI: Chat with PDF locally using Llama 3

- Published on

- Authors

- Name

- Xiaoyi Chen

- @chxy

Chat with PDF offline

RecurseChat is a macOS app that helps you use local AI as a daily driver. When RecurseChat initially launched on Hacker News, we received overwhelming support and feedback.

The app looks great! - ggerganov

Cool, instant buy for me - brigleb

Love this! Just purchased. I am constantly harping on decentralized AI and love seeing power in simplicity. - bradnickel

However, at the time of this launch, it couldn't converse with your local documents. A local AI app isn't complete if it can't talk to local documents.

It's here: RecurseChat now supports chatting with local documents like PDFs and markdown completely locally and offline.

Here is how you can start chatting with your local documents using RecurseChat: Just drag and drop a PDF file onto the UI, and the app prompts you to download the embedding model and the chat model. This only needs to be done once. Now you have an offline ChatGPT for your PDF files. The responses reference the relevant pieces of the original document. Turn off wifi, it still works.

Meta Llama 3

Meta Llama 3 took the open LLM world by storm, delivering state-of-the-art performance on multiple benchmarks. In version 1.0.101, we added support for Meta Llama 3 for local chat completion.

You can chat with your local documents using Llama 3, without extra configuration.

RAG and the Mac App Sandbox

Under the hood, chat with PDF feature is powered by Retrieval Augmented Generation (RAG).

RecurseChat is the first macOS app on the Mac App Store that performs Retrieval Augmented Generation (RAG) completely locally and offline.

"Wait, but I've seen RAG apps running on macOS before. What's new?"

Great question! They are on macOS, but not on the Mac App Store. Is there anything different? Here is why it matters: Mac App Store enforced sandboxing, which means the app runs in a restricted environment.

The files that RecurseChat can have access to are its data folder (For example, the models downloaded, the database the app uses), the files opened by the system dialog, and the files you dragged and dropped onto the UI. Not the same story about non-sandboxed apps. They can access any file on your system, even data in sandboxed apps.

You can verify whether an app is secured by Mac App Sandbox by using codesign tool in the terminal:

codesign -d --entitlements - /Applications/RecurseChat.app | grep com.apple.security.app-sandbox

If the app is sandboxed, you will see an output including com.apple.security.app-sandbox like below:

Executable=/Applications/RecurseChat.app/Contents/MacOS/RecurseChat

[Key] com.apple.security.app-sandbox

Try replacing /Applications/RecurseChat.app with the path to the app you want to check. Chances are, your apps downloaded outside of the Mac App Store might or might not be sandboxed, but apps from your Mac App Store purchases should be sandboxed, as well as system applications such as Safari (/Applications/Safari.app) and QuickTime Player (/System/Applications/QuickTime Player.app).

Historically, Apple has prioritized security in its operating system design. The iOS sandbox was introduced with the launch of iOS in 2007, becoming a core part of the iOS security model. iOS apps by default run under sandboxes, making iOS a highly secure mobile platform. Mac followed the path to introduce sandbox in 2011 with the release of OS X 10.7 Lion.

But because of the diversity of macOS apps, Mac sandbox was only enforced on apps distributed through the Mac App Store. Even Obsidian.md, a privacy-focused app, did not implement App Sandbox. (We absolutely love Obsidian as a tool, and we find ourselves resonating with Obsidian's philosophy, but we think Obsidian could benefit from sandbox security mechanism, especially given the variety of community plugins.)

Although developing a sandboxed app is non-trivial, privacy is of paramount importance as a principle of RecurseChat. We have ensured RecurseChat works inside the app sandbox and still has a smooth UX. The sandbox mechanism serves as a foundation for developing private and personalized AI apps.

RAG stack

Let's go into details of the RAG implementation. Our RAG stack is powered by the following technologies:

Chunking

PDF file is parsed into text content using PDF.js, then chunked using langchain.

Embedding

The chunks are then embedded using llama.cpp embedding model. At this moment, we support FlagEmbedding. In the future, we will support more embedding models.

Since we provide direct access to the underlying llama.cpp server 1, You can also use the API to embed text content of your choice if you are interested.

For example, you can embed text content using the following API call:

curl http://localhost:15242/v1/embeddings \

-H "Content-Type: application/json" \

-H "Authorization: Bearer no-key" \

-d '{

"input": "hello"

}'

Response will be in the following format:

{"data":[{"embedding":[0.0006718881777487695,0.0426122322678566,0.020298413932323456,...]}]}

Vector Database

Vector DB is a highly contested field in RAG applications. Superlinked has a nice comparison table of 38 vector databases to choose from.

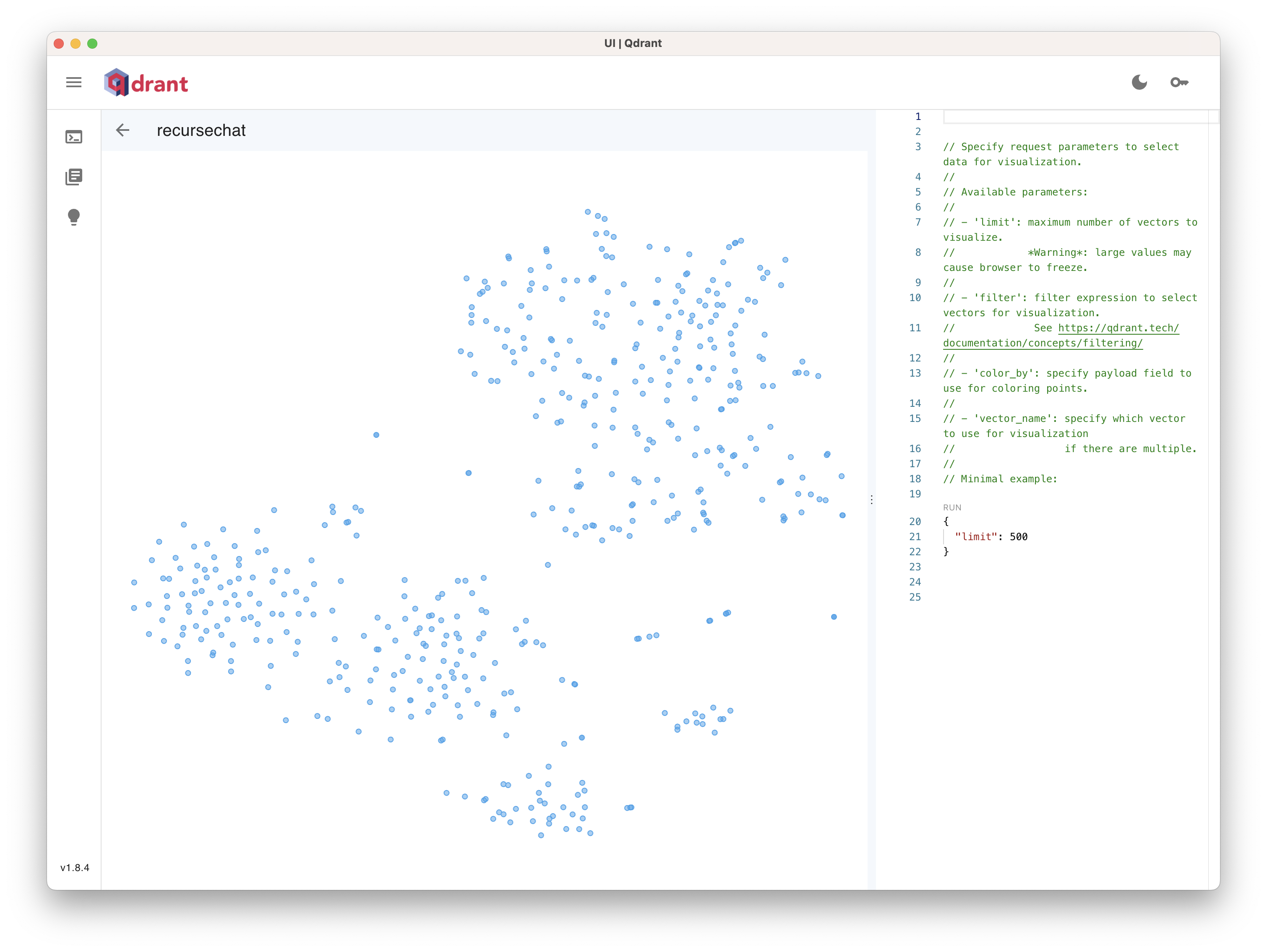

We settled down on Qdrant, a high-performance vector database developed in Rust, for its superior performance 2 and production readiness 3. The vector database runs locally on your machine. Once a document is indexed, we store the embeddings in the vector database. The document does not need to be re-indexed unless it's modified, making follow-up queries fast. When we store the embeddings, we also store the metadata of the document, so that we can provide citations to the original document.

We also offer a couple of convenient shortcuts for managing the local vector database, so you can manage the storage or perform customized queries. You have ownership over your document index.

For example, you can open Qdrant dashboard and visualize your document embeddings:

Augment Query

When you chat with the document, the query is augmented with the context based on the similarity of the query to the document embeddings. The augmented query is then passed to the chat completion model.

Chat completion

For local models, we use llama.cpp for chat completion. If you choose a local model for chat completion, the app works completely offline.

For OpenAI compatible models, we use OpenAI SDK for chat completion. This allows us to support online providers like Groq and Together AI, as well as endpoints that support OpenAI compatible API such as Ollama. If you use an online LLM provider, you don't need to worry about incurring a large embedding cost as you only pay for the chat completion API.

What's next?

Many of our users have quite a few documents that they want as a persistent context of their conversations. We are working on making chatting with your wealth of local documents easier.

We'd also love to hear about your ideal local document workflows and use cases, and how we can make RecurseChat more useful for you. Feel free to reach out to us on Twitter or Email.

Footnotes

You can start a llama.cpp server with

-mparam pointing to your embedding GGUF model and--embeddingto enable embedding. ↩Qdrant Benchmark: https://qdrant.tech/benchmarks/ ↩

According to this comment, Qdrant scales to billions of vectors. ↩