Hugging Face GGUF model support available in RecurseChat

Author: Xiaoyi Chen (@chxy)

Hugging Face Support

RecurseChat now supports adding GGUF models directly from Hugging Face.

Previously, you could download the default models from Hugging Face or link a local GGUF file in RecurseChat, which is useful if you already have models downloaded. Now you can add any GGUF model of your choice directly from the Hugging Face Hub. This adds another layer of customization for RecurseChat users who want more control over their AI conversations.

Go to Model Page -> New Hugging Face GGUF Model to create a model directly from a Hugging Face URL.

GGUF metadata

RecurseChat reads the GGUF metadata of the Hugging Face model to populate the model details in the interface, including the model name and the chat template.

We use the @huggingface/gguf library to fetch the metadata from the Hugging Face model, and infer a llama.cpp chat template from the tokenizer.chat_template field of the model metadata.

You can fetch the GGUF metadata from a Hugging Face model using the following code:

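```javascript
// Run as an ES module (top-level await).
import { gguf } from "@huggingface/gguf";

// Use a /resolve/ URL: /blob/ points at the Hub's HTML file viewer, not the raw GGUF file.
const url = "https://huggingface.co/QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/resolve/main/Meta-Llama-3-8B-Instruct.Q5_K_M.gguf";

// gguf() reads the metadata at the start of the file instead of downloading the whole model.
const { metadata, tensorInfos } = await gguf(url);
console.log(metadata["general.name"], metadata["tokenizer.chat_template"]);
```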
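The returned `metadata` is an object keyed by GGUF metadata keys such as `general.name` and `tokenizer.chat_template`. A minimal sketch of turning it into the model details shown in the UI might look like this; `modelDetailsFromMetadata` and its file-name fallback are hypothetical, not RecurseChat's actual code, and the metadata object in the usage example is hand-written rather than fetched.

```javascript
// Hypothetical helper: derive display details from GGUF metadata.
// `metadata` is assumed to be an object keyed by GGUF keys, like the one gguf() returns.
function modelDetailsFromMetadata(metadata, url) {
  // Fall back to the file name (minus the .gguf extension) if general.name is missing.
  const fileName = new URL(url).pathname.split("/").pop() ?? "";
  const fallbackName = fileName.replace(/\.gguf$/i, "");
  const name =
    typeof metadata["general.name"] === "string" ? metadata["general.name"] : fallbackName;
  // tokenizer.chat_template is optional in GGUF files, so it may be absent.
  const chatTemplate =
    typeof metadata["tokenizer.chat_template"] === "string"
      ? metadata["tokenizer.chat_template"]
      : null;
  return { name, chatTemplate };
}

// Usage with a hand-written metadata object (no network access needed).
const details = modelDetailsFromMetadata(
  { "general.name": "Meta-Llama-3-8B-Instruct" },
  "https://huggingface.co/QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/resolve/main/Meta-Llama-3-8B-Instruct.Q5_K_M.gguf"
);
console.log(details);
```

Falling back to the file name keeps the model list readable even for GGUF files that omit `general.name`.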

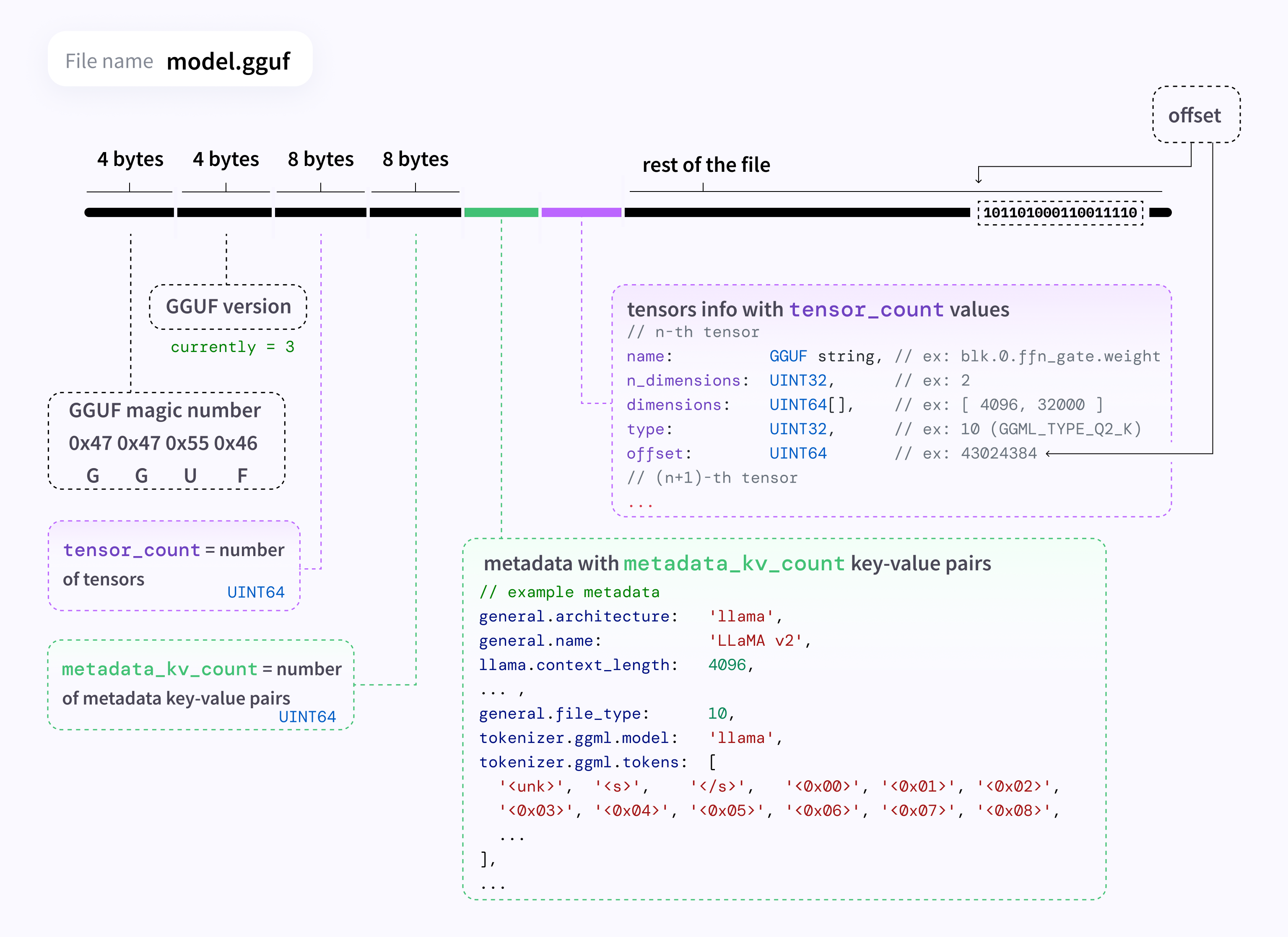

GGUF metadata information is extracted from the front of the GGUF file, as shown in this excellent visualization by Hugging Face:

If you are interested in learning more about the GGUF format, you can check out this Hugging Face guide and this deep dive by Vicki Boykis.
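To make "the front of the file" concrete: per the GGUF specification, a file starts with a small fixed-size header (the magic bytes `GGUF`, a format version, the tensor count and the number of metadata key-value pairs), and the key-value pairs follow right after it. The sketch below reads only that header, assuming the GGUF v2/v3 layout (little-endian, 64-bit counts; v1 used 32-bit counts). `readGgufHeader` is a hypothetical helper, and the header in the usage example is synthetic with made-up counts; libraries like @huggingface/gguf also parse the key-value pairs that follow.

```javascript
// Sketch: read the fixed 24-byte GGUF header (v2/v3 layout, little-endian).
// The metadata key-value pairs start immediately after these bytes.
function readGgufHeader(buffer) {
  if (buffer.byteLength < 24) throw new Error("buffer too small for a GGUF header");
  // Bytes 0-3: the ASCII magic "GGUF".
  const magic = String.fromCharCode(...new Uint8Array(buffer, 0, 4));
  if (magic !== "GGUF") throw new Error(`not a GGUF file (magic: ${magic})`);
  const view = new DataView(buffer);
  return {
    version: view.getUint32(4, true), // bytes 4-7
    tensorCount: view.getBigUint64(8, true), // bytes 8-15
    metadataKvCount: view.getBigUint64(16, true), // bytes 16-23
  };
}

// Usage: build a synthetic header instead of downloading a real model.
const buf = new ArrayBuffer(24);
new Uint8Array(buf).set([0x47, 0x47, 0x55, 0x46]); // "GGUF"
const dv = new DataView(buf);
dv.setUint32(4, 3, true); // version 3
dv.setBigUint64(8, 291n, true); // synthetic tensor count
dv.setBigUint64(16, 24n, true); // synthetic metadata KV count
console.log(readGgufHeader(buf));
```

Because everything a chat client needs (name, chat template, tokenizer settings) sits in this leading section, it can be read without fetching the multi-gigabyte tensor data.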

Chat templates

Hugging Face chat templates are written in Jinja, which differs from the chat template format that llama.cpp uses. We infer the chat template heuristically, similar to llama.cpp's approach of looking for markers in the Jinja template that identify a known prompt format. In addition to Hugging Face models, we also added chat template inference for local GGUF models.
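A heavily simplified sketch of that heuristic: scan the Jinja source for markers that are distinctive of a known prompt format and map the first match to a preset name. The rule table, preset names and `inferChatTemplate` are illustrative assumptions loosely modeled on llama.cpp's built-in templates, not RecurseChat's actual implementation; real detection covers many more formats and variants (for example, several Mistral formats also use `[INST]`).

```javascript
// Hypothetical, simplified rule table: marker substrings -> preset name.
// Order matters: the first matching rule wins.
const TEMPLATE_RULES = [
  { preset: "chatml", test: (t) => t.includes("<|im_start|>") },
  { preset: "llama3", test: (t) => t.includes("<|start_header_id|>") && t.includes("<|end_header_id|>") },
  { preset: "phi3", test: (t) => t.includes("<|assistant|>") && t.includes("<|end|>") },
  { preset: "gemma", test: (t) => t.includes("<start_of_turn>") },
  { preset: "llama2", test: (t) => t.includes("[INST]") },
];

// Returns a preset name, or null when nothing matches (the caller picks a default).
function inferChatTemplate(jinjaTemplate) {
  if (typeof jinjaTemplate !== "string" || jinjaTemplate.length === 0) return null;
  const rule = TEMPLATE_RULES.find((r) => r.test(jinjaTemplate));
  return rule ? rule.preset : null;
}

// Usage: an abbreviated ChatML-style Jinja template.
const tmpl =
  "{% for message in messages %}{{'<|im_start|>' + message['role'] + '\\n' + message['content'] + '<|im_end|>\\n'}}{% endfor %}";
console.log(inferChatTemplate(tmpl));
```

Substring matching avoids running a Jinja engine at all, at the cost of only recognizing formats that are in the table.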

If you want to customize the chat template, you can edit it in Model -> Customize -> Advanced Settings. If you know the model uses a particular preset, you can apply one of the chat template presets supported by llama.cpp.
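To illustrate what a preset amounts to, here is a rough sketch of how a ChatML-style preset turns a list of messages into a single prompt string. `applyChatmlPreset` is a hypothetical name; llama.cpp implements its presets in C++ and supports many more of them, so treat this as an illustration of the ChatML format rather than the exact code path.

```javascript
// Hypothetical sketch: render messages with a ChatML-style preset.
// Each message becomes "<|im_start|>{role}\n{content}<|im_end|>\n".
function applyChatmlPreset(messages, addAssistantPrompt = true) {
  let prompt = "";
  for (const { role, content } of messages) {
    prompt += `<|im_start|>${role}\n${content}<|im_end|>\n`;
  }
  // Open an assistant turn so the model generates the reply from here.
  if (addAssistantPrompt) prompt += "<|im_start|>assistant\n";
  return prompt;
}

const prompt = applyChatmlPreset([
  { role: "system", content: "You are a helpful assistant." },
  { role: "user", content: "Hello!" },
]);
console.log(prompt);
```

If a model was trained on a different format, applying the wrong preset usually still produces text, but with noticeably worse or runaway responses, which is why picking the matching preset matters.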

Deep Link

We also added support for deep links to Hugging Face models, so you can share a model with others and they can add it to their RecurseChat app with a single click.

For example, opening recursechat://new-hf-gguf-model?hf-model-id=microsoft/Phi-3-mini-4k-instruct-gguf will open the RecurseChat app and add the model directly.

Replace the hf-model-id value with the Hugging Face model ID (owner/repo) you want to add.
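If you generate these links programmatically (for example, on a model card or in a README), a small helper can build and check them. This is a minimal sketch: `buildDeepLink`, `parseDeepLink` and the owner/repo pattern are hypothetical and only approximate the Hub's naming rules. The model ID is inserted unencoded to match the example link above; whether RecurseChat also accepts a percent-encoded `/` is not something this post states.

```javascript
// Hypothetical helpers for links of the form
// recursechat://new-hf-gguf-model?hf-model-id=<owner>/<repo>
const DEEP_LINK_BASE = "recursechat://new-hf-gguf-model";

// Loose owner/repo check (letters, digits, "_", "-", "."); an assumption, not the Hub's exact rules.
const MODEL_ID_PATTERN = /^[A-Za-z0-9][\w.-]*\/[\w.-]+$/;

function buildDeepLink(modelId) {
  if (!MODEL_ID_PATTERN.test(modelId)) {
    throw new Error(`expected an owner/repo model ID, got "${modelId}"`);
  }
  // Insert the ID as-is, matching the documented example link.
  return `${DEEP_LINK_BASE}?hf-model-id=${modelId}`;
}

// Extract the model ID from a link, or return null if it is not this kind of link.
function parseDeepLink(link) {
  const url = new URL(link);
  if (url.protocol !== "recursechat:" || url.hostname !== "new-hf-gguf-model") return null;
  return url.searchParams.get("hf-model-id");
}

const link = buildDeepLink("microsoft/Phi-3-mini-4k-instruct-gguf");
console.log(link);
console.log(parseDeepLink(link));
```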

We'd love to hear about your use cases for Hugging Face models and how we can make RecurseChat more useful for you. Feel free to reach out to us on X or by email.