Chat with PDF

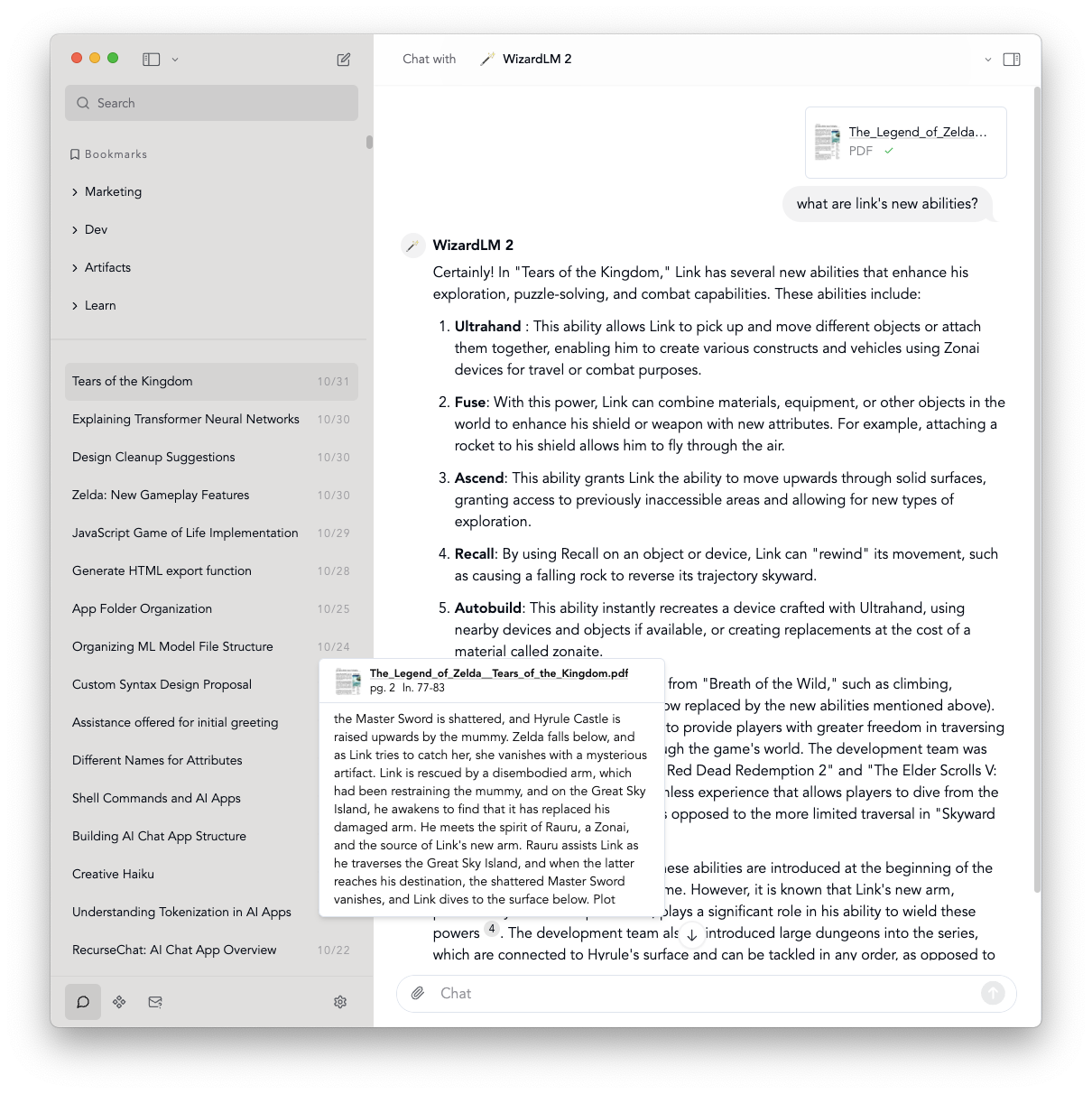

RecurseChat allows you to chat with PDF files using local AI models or AI providers of your choice. You can drag and drop PDF files onto the chat window or use the file picker to select PDF files.

When you chat with a PDF file, RecurseChat will process the PDF content and make it available for the AI model to reference during the conversation. You can ask questions about the PDF content, and the AI will provide relevant responses based on the information contained in the document.

Features

- Drag and drop PDF files directly into the chat

- Use local AI models or cloud providers (OpenAI, Anthropic) for PDF analysis

- Ask questions about specific sections or the entire document

- Attach multiple PDFs in a single chat session

- Works in both the main window and the floating chat window

Using Chat with PDF

- Start a new chat session

- Drag and drop a PDF file into the chat window, or click the attachment button to select a file

- Wait for the PDF to finish processing if it hasn’t been processed already

- Ask questions about the PDF content

The AI will reference the PDF content to provide accurate and contextual responses to your queries.

Hover over the citation marks in the AI’s response to see the specific sections of the PDF that were cited. This helps you verify the accuracy of responses and quickly find the relevant parts of the document.

Supported Models

You can use any of our supported models for PDF chat:

- Local AI models (Llama, Mistral, etc.)

- OpenAI models (with API key)

- Anthropic Claude models (with API key)

Each model may handle PDF content differently, so feel free to experiment with different models to find the one that works best for your needs.

Common questions

How does Chat with PDF work?

We’ve written a detailed blog post about how Chat with PDF works under the hood.

In short, RecurseChat uses Retrieval Augmented Generation (RAG) to enable PDF chat. The PDF is first parsed into text chunks. These chunks are then embedded and stored in a local vector database. When you chat, your query is augmented with relevant context from the document based on embedding similarity, then passed to your chosen chat completion model (either local or cloud-based) for generating responses.
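To make the retrieval flow above more concrete, here is a minimal Python sketch of the same four steps: chunk, embed, store, and retrieve-then-augment. It is an illustration only, not RecurseChat's actual implementation. The function names (`chunk_text`, `embed`, `build_index`, `retrieve`, `augment`) are hypothetical, the bag-of-words `embed` is a toy stand-in for a real embedding model, and a plain in-memory list stands in for the local vector database.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=40):
    """Split already-extracted PDF text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy embedding: word counts. Assumption: a real system uses a neural embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(text, max_words=40):
    """Embed every chunk; the returned list stands in for a vector database."""
    return [(chunk, embed(chunk)) for chunk in chunk_text(text, max_words)]


def retrieve(index, query, k=2):
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def augment(query, chunks):
    """Build the prompt that would be sent to the chat completion model."""
    context = "\n".join(f"[{i + 1}] {c}" for i, c in enumerate(chunks))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"
```

A typical call sequence would be `index = build_index(pdf_text)`, then `prompt = augment(question, retrieve(index, question))`, with `prompt` passed to whichever local or cloud model you have selected. The numbered `[1]`, `[2]` labels in the context hint at how a response could point back to specific chunks, though how RecurseChat produces its citations is not described here.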

Can I chat with PDF offline?

Yes. If you use a local AI chat model, you can chat with PDFs completely offline and without API fees.